First of all, download and install RivaTuner. It's a great, easy-to-use overclocking utility that works with the vast majority of nVidia and ATi graphics cards, and it outperforms the utilities provided by the manufacturers. With that covered, let's get to the point.

When you start up RivaTuner, it should look something like this:

You'll see some general information about your setup: driver info, which card you have, what kind of RAM it has, and so on. To get started, click the arrow to the right of "Customize..." on the bar that reads "ForceWare detected" in this example:

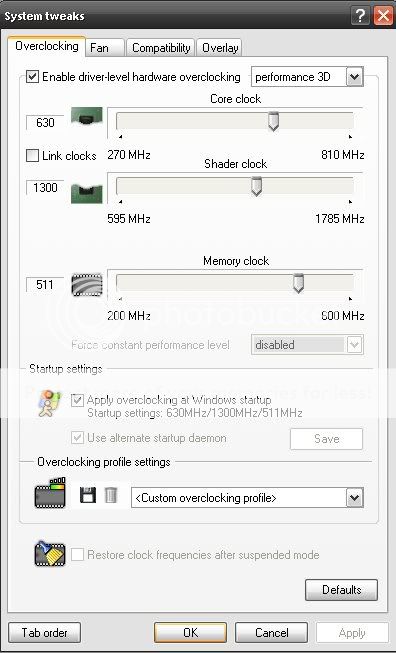

Click the picture of a video card, the one furthest to the left. That will take you to a window looking somewhat like this:

This is the main overclocking window, where the real overclocking happens. Before you do any clocking, though, make sure your cooler can handle it. Most GeForce 6/7 series cards and ATi X800/X850/X1600/X1800/X1900 series cards with their stock active coolers are good enough for some overclocking. Either way, blow the dust out of the cooler with compressed air or a straw first; there's often more dust in there than you would imagine. Never use a vacuum cleaner to clean out your computer, as it builds up a lot of static electricity that can easily damage your components.

I don't have much personal experience with the 8800/HD series cards and their coolers. All I know from what I've tried is that the 320MB G80 8800GTS shouldn't be overclocked with the stock cooler. If anyone has info on this that would fit in this guide, please let me know, and I'll add it and give credit.

You should also install Everest or SpeedFan to check your temperatures (RivaTuner can monitor them too). Run a 3D app like 3DMark06 (I strongly recommend it, since it's a very good benchmark for seeing how effective your clocking has been), and check the temps after a minute or two. If they're over 65-70C, I wouldn't recommend overclocking the card with its current cooler: it would run a bit too hot for comfort, and the extra heat can cause instability that cancels out the overclock and may even harm the card. In that case you should get an aftermarket cooler; they're usually not that expensive, and usually run cooler and quieter.
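If it helps to see that check written out, here's a small sketch. It assumes you jot down a few temperature readings while 3DMark06 runs at stock clocks, and it uses 65C (the cautious end of the 65-70C rule of thumb) as the cutoff; `ready_to_overclock` is just a made-up helper name, and nothing here talks to Everest, SpeedFan or RivaTuner.

```python
# Sketch of the pre-overclock temperature check.
# Readings are typed in by hand from whatever monitor you use;
# this code does not read any sensors itself.

STOCK_LOAD_LIMIT_C = 65  # assumption: cautious end of the 65-70C rule of thumb

def ready_to_overclock(readings_c):
    """True if the hottest reading under 3D load at stock clocks stays within the limit."""
    peak = max(readings_c)  # judge by the worst reading, not the average
    return peak <= STOCK_LOAD_LIMIT_C

# Temps noted every 30 seconds or so while 3DMark06 runs at stock clocks
print(ready_to_overclock([52, 58, 61, 63]))  # True: room to overclock
print(ready_to_overclock([55, 64, 69, 71]))  # False: get a better cooler first
```

Using the peak rather than the average is deliberate: the card has to survive its hottest moment, not its typical one.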

With that covered, let's go on with actually overclocking your card.

The first thing you need to do is check the "Enable driver-level hardware overclocking" box at the top of the window. This unlocks the sliders under it and shows a drop-down box on the right.

The drop-down box is set to "Standard 2D" by default. Set it to "Performance 3D"; otherwise the overclock will only affect your desktop, which doesn't exactly need a lot of graphics performance. Well, Vista does.

Now we get to the trial-and-error part of overclocking a video card... which is, well, overclocking the video card. Depending on the card and cooler, it might gain 400MHz or only 100MHz; it is very, very card-specific. In general, lower-end cards have more headroom than higher-end ones, since they're usually just downclocked versions of the more powerful cards based on the same core. For instance, the GeForce 6600 (300MHz stock) will usually clock to around 560MHz, while the 6600GT (500MHz stock) usually also tops out at about 560MHz. The 7600GS has a stock core frequency of 450MHz and will go up to 600-610MHz, while the 7600GT, at 575MHz stock, will go to 620-630MHz.
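To put numbers on "lower-end cards clock better", here's the headroom worked out as a percentage of stock clock for the examples above. Where I gave a range, the sketch uses the lower end; these are typical figures, not guarantees for your particular card.

```python
# Typical core clocks (MHz) from the examples above: (stock, usual maximum).
# Where a range was given (600-610, 620-630), the lower end is used.
CARDS = {
    "GeForce 6600":   (300, 560),
    "GeForce 6600GT": (500, 560),
    "GeForce 7600GS": (450, 600),
    "GeForce 7600GT": (575, 620),
}

def headroom_percent(stock_mhz, max_mhz):
    """Overclocking headroom as a percentage of the stock clock."""
    return (max_mhz - stock_mhz) / stock_mhz * 100

for name, (stock, top) in CARDS.items():
    print(f"{name}: {stock} -> {top} MHz (+{headroom_percent(stock, top):.0f}%)")
```

That prints roughly +87% for the 6600 against +12% for the 6600GT, and +33% for the 7600GS against +8% for the 7600GT: same core, same ceiling, very different starting points.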

Anyhow, back to the clocking. This is both the hardest and the easiest part. It's hard because you need to know what your card can handle, and easy because it's just a matter of moving a slider to the right. If you don't know what your card can handle, which you probably don't, you'll have to find out by overclocking in small steps.

We'll start with the core clock, since it has the most impact and can usually be raised the most before artifacts appear. In many cases the RAM can barely be overclocked at all, as is the case with the card in this guide.

I'd recommend starting with a rather large step, then continuing with smaller steps, running a 3D app after each one.

50MHz is usually a pretty good start, since most cards can take it, and it saves time compared with going in small steps right from the start. If you want to be completely on the safe side, you can go in 10-20MHz steps from the beginning.

After moving the slider, click Test, then Apply, and run a 3D app. I usually run the first test in 3DMark06 and look for artifacts, which appear if the card is clocked too high or runs too hot. (The Test button isn't in the example pictures, since RivaTuner can't test my card/driver combination.)


Artifacts caused by too high a memory clock (running 500MHz DDR1 at over 600MHz, lulz).

Memory-caused artifacts are usually much more severe than those caused by the GPU. I tried to create some GPU artifacts for reference, but the system crashed before any appeared. GPU artifacts usually show up as broken models, like giant "spears" sticking out of the ground or endless holes, whereas memory artifacts usually show up as texture errors.

Once the large first step has passed 3DMark's first test with no artifacts, you can continue with a couple (2-3) of 20MHz steps, and then 10MHz at a time, running a 3D app after every click on the Apply button. Remember to check the temps immediately after running the 3D app, so that your card isn't overheating. Modern cards can withstand very high temperatures (over 100C), but once they pass 80C, I wouldn't push them any hotter. You don't want to boil water on it.
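Here's the stepping schedule as a quick sketch, in case it's easier to follow as a list of targets: one 50MHz jump, a few 20MHz steps, then 10MHz at a time. `step_plan` and its `ceiling_mhz` parameter are made up for illustration; in practice you stop when artifacts show up or temps pass 80C, and the ceiling here just stands in for the end of the slider.

```python
def step_plan(stock_mhz, ceiling_mhz, first=50, mid=20, mid_steps=3, fine=10):
    """Yield successive clock targets: one big step, a few medium ones, then fine steps.

    ceiling_mhz is a hypothetical stand-in for the slider's maximum;
    no target above it is produced.
    """
    steps = [first] + [mid] * mid_steps  # coarse steps used before switching to fine ones
    clock = stock_mhz
    while True:
        step = steps.pop(0) if steps else fine
        clock += step
        if clock > ceiling_mhz:
            return
        yield clock

# A 7600GS (450MHz stock), with targets up to its usual 610MHz limit
print(list(step_plan(450, 610)))
```

For the 7600GS that works out to 500, 520, 540, 560, then 570 through 610 in 10MHz steps. Set `mid_steps=2` if you'd rather switch to small steps sooner, or `first=20` to be on the safe side from the start.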

Sometimes you may run out of slider range even though your card ran fine at the maximum setting, and you want to go further. This requires a little tweaking in RivaTuner.

To fix this, close the overclocking window, go to the "Power User" tab, and expand RivaTuner/Overclocking/Global, as illustrated in the picture:

Double-click the grey field to the right of "MaxClockLimit" and enter a number. It doesn't have to be high, since it isn't the clock frequency limit itself. 150 is usually more than enough.

This raises the top of the slider to a higher value that depends on your card's stock frequencies. I don't know exactly how it calculates the new limit, but it will be enough to push the card to its limits.

Now, with that tweak applied, the overclocking window should look something like this:

From here, just keep going 10MHz at a time (test, apply, run a 3D app) until you reach frequencies and temps you're happy with. Then do the same with the memory, but use 10MHz steps from the start, because the memory usually can't be overclocked as much as the GPU.
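For the curious, here's the whole trial-and-error loop as a sketch. `run_3d_test` is a hypothetical stand-in for *you* running 3DMark06 at a given clock and noting whether artifacts appeared and how hot the card got; it is not a RivaTuner feature. The loop keeps the last clock that came out clean and under 80C. The example runs it on a simulated memory clock, starting from the 500MHz DDR1 card in the screenshot, with 10MHz steps from the start.

```python
from typing import Callable, Iterable, Tuple

MAX_LOAD_TEMP_C = 80  # past this, don't push the card any hotter

def find_max_clock(targets: Iterable[int],
                   run_3d_test: Callable[[int], Tuple[bool, float]],
                   stock_mhz: int) -> int:
    """Return the last clock that passed the 3D test without artifacts or overheating."""
    best = stock_mhz
    for clock in targets:
        artifacts, temp_c = run_3d_test(clock)
        if artifacts or temp_c > MAX_LOAD_TEMP_C:
            break  # failed: back off to the last good clock
        best = clock
    return best

def fake_memory_test(clock):
    # Simulated card (made up): memory artifacts start above 530MHz, temps stay at 70C.
    return clock > 530, 70.0

# Memory: 10MHz steps straight away, from 510MHz up to 600MHz
mem_targets = range(500 + 10, 600 + 1, 10)
print(find_max_clock(mem_targets, fake_memory_test, stock_mhz=500))
```

With the simulated card, 510-530MHz pass and 540MHz shows artifacts, so it settles on 530MHz. The same loop works for the core clock if you feed it the bigger first steps instead.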

If you have any questions, feel free to contact me, and I'll be glad to help with any problems.

© FREEZER7PRO 2008

Last edited by Freezer7Pro (2008-03-02 03:57:20)