Scorpion0x17 wrote:

Bertster7 wrote:

Scorpion0x17 wrote:

This is just a stupidly bad idea.

For us gamers at least.

Bertster's right - Intel almost certainly have the right approach - combined CPU/GPU for low-end cheap systems.

I don't see the point of AMD's high-end solution. I'm planning to upgrade my video card to a DX10 one at some point, and that will be the third (or will it be the fourth?) video card I've had in this box.

If you buy into a combined CPU/GPU you're just spending extra money on a component you can't take out and upgrade (though I'd assume the GPU part will be disableable).

Hmm... Actually... I could see AMD's approach working if it were for the console market... but otherwise, pointless for PC gamers.

That's not what I meant.

It is extremely likely there will be enormous performance benefits from such a combination. But I feel that the approach AMD appear to be taking will be less profitable.

Oh, ok... Then you're not right...

I doubt the performance benefit will be that big. A decent amount of fast on-board video RAM is far more important for performance than any improvement in communication between the CPU and GPU, because there's very little communication between the two. Basically a game says "Here's a bunch of triangles, here's the textures, shaders and so on I want applied to them, please render that for me". There may then be some data transfer from system memory to video memory (which is why it's so important to have plenty on board, 'cos then you just put everything in video RAM from the start). Then the GPU goes off and renders away. That's it.
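To put rough numbers on that argument, here's a toy sketch in Python. Everything in it is an assumption for illustration: the function name `bus_bytes_per_frame`, the asset and command sizes, and the worst-case rule that anything not fitting in video RAM gets re-sent every frame. It is not a measurement of any real card or game.

```python
# Toy model of CPU -> GPU bus traffic per frame.
# All sizes are made-up illustrative values, not measurements.

MB = 1024 * 1024


def bus_bytes_per_frame(asset_bytes, command_bytes, vram_bytes):
    """Bytes crossing the bus each frame.

    Assets that fit in video RAM are uploaded once up front, so each frame
    only sends draw commands. Whatever does not fit is assumed (worst case)
    to be streamed from system memory again every frame.
    """
    overflow = max(0, asset_bytes - vram_bytes)
    return command_bytes + overflow


assets = 300 * MB    # hypothetical textures + geometry for one level
commands = 1 * MB    # hypothetical per-frame draw calls and constants

plenty = bus_bytes_per_frame(assets, commands, vram_bytes=512 * MB)
tight = bus_bytes_per_frame(assets, commands, vram_bytes=256 * MB)

print(plenty // MB, "MB per frame with 512 MB of video RAM")
print(tight // MB, "MB per frame with 256 MB of video RAM")
```

With these made-up figures, once everything is resident in video RAM the per-frame traffic is just the command stream, so a faster CPU/GPU link has little left to speed up.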

That's why I think Intel are going for the low-end low-cost market and AMD could well be going for the console market (where fewer fixed components is better).

Looking more at what they are doing, I reckon AMD's plan is crap. All the block diagrams I've seen from AMD seem very basic: simple integration of GPU and CPU on the same die. This, as you rightly say, will not improve performance for the type of dense linear algebra that is currently performed on GPUs. It may, again as you say, have important applications in mobile environments.

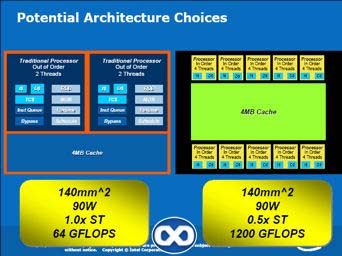

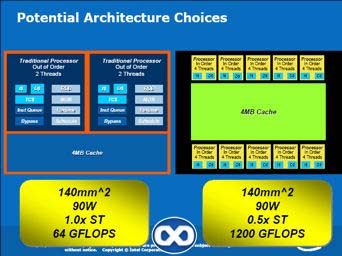

Intel's ideas look far more interesting. In the short term Intel hope to target the low end of the market. Long term Intel are looking at ways of increasing parallelism in graphics and moving to more irregular algorithms (Nvidia are coming at it from the opposite direction with technologies like CUDA, making GPUs more capable of performing irregular tasks). Intel's plans involve clever use of multiple smaller cores, which would require massive changes to programming techniques to be efficient, yet could increase performance immensely.

Combined with a wide vector FPU this system could provide staggering performance, though a significant performance decrease should be expected for single threaded code.
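That trade-off between many small cores and single-threaded speed can be sketched with Amdahl's law. This is a minimal sketch, not anything Intel have published: the function name `relative_speed`, the 16-core count and the "each small core runs at half the speed of a big core" figure are all assumptions picked for illustration.

```python
def relative_speed(parallel_fraction, cores, core_speed):
    """Speed relative to one fast core (1.0 means 'same speed').

    parallel_fraction: share of the work that can be spread across cores.
    cores:             number of small cores (hypothetical).
    core_speed:        speed of one small core relative to the fast core,
                       e.g. 0.5 means half as fast (hypothetical).
    """
    serial_fraction = 1.0 - parallel_fraction
    # Amdahl's law: the serial part runs on one core, the rest is divided up.
    time_taken = (serial_fraction + parallel_fraction / cores) / core_speed
    return 1.0 / time_taken


for p in (0.0, 0.5, 0.95, 1.0):
    print(f"{p:.0%} parallel: {relative_speed(p, cores=16, core_speed=0.5):.2f}x")
```

Under these assumed figures, purely single-threaded code runs at half speed, while fully parallel code runs eight times faster. Which end of that range you land on depends entirely on how much of the program can actually be split up, which is why the programming techniques would have to change.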

Intel predict that graphics will move away from rasterisation and start to be ray traced, which would improve image quality immeasurably. How they will manage to render ray traced scenes in real time I have no idea, though this type of technology should pave the way for that to be possible.
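For anyone wondering why real-time ray tracing is such a big ask, here's a minimal sketch of the core loop: one ray per pixel, each tested against every object in the scene. It's a bare-bones ray caster written for illustration, not Intel's approach. The scene (a single sphere), the tiny ASCII "screen" and the names `hit_sphere` and `render` are all my own assumptions.

```python
import math


def hit_sphere(origin, direction, centre, radius):
    """Distance along a unit-length ray to the sphere, or None if it misses."""
    oc = [o - c for o, c in zip(origin, centre)]
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * c  # quadratic discriminant; a == 1 for a unit ray
    if disc < 0:
        return None
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None


def render(width, height, spheres):
    """Cast one ray per pixel; return ASCII rows and the number of tests done."""
    rows, tests = [], 0
    for y in range(height):
        row = ""
        for x in range(width):
            # Ray from the eye at the origin through this pixel on a plane at z = -1.
            px = (x + 0.5) / width * 2 - 1
            py = 1 - (y + 0.5) / height * 2
            norm = math.sqrt(px * px + py * py + 1)
            direction = (px / norm, py / norm, -1 / norm)
            hit = False
            for centre, radius in spheres:
                tests += 1
                if hit_sphere((0, 0, 0), direction, centre, radius) is not None:
                    hit = True
            row += "#" if hit else "."
        rows.append(row)
    return rows, tests


rows, tests = render(16, 8, [((0.0, 0.0, -3.0), 1.0)])
print("\n".join(rows))
print(tests, "ray-sphere tests")
```

Even this toy does width × height × objects intersection tests per frame. A 1280x1024 frame is about 1.3 million primary rays before you add any shadows, reflections or a scene with more than one object in it, and every one of those rays is independent of the others, which is exactly the sort of work lots of small cores would suit.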

If Intel are right then they will have obtained a massive lead in pioneering technology for a shift that will make most existing graphics processing techniques fairly redundant and will make them loads of money.